…

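1305 points, less than half of the total. After half a year of fooling around my skills are still this half-baked. Mostly I feel disappointed, but I am not going to stop. I am also annoyed: I missed out on 400 points because I used the search engine the wrong way.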

Week1

Base Family Portrait (Base全家福)

R1k0RE1OWldHRTNFSU5SVkc1QkRLTlpXR1VaVENOUlRHTVlETVJCV0dVMlVNTlpVR01ZREtSUlVIQTJET01aVUdSQ0RHTVpWSVlaVEVNWlFHTVpER01KWElRPT09PT09

Decode Base64, then Base32, then Base16.

In fact, basecrack can run this whole chain of base decodings automatically:

    python basecrack.py --m

    python basecrack.py -h [FOR HELP]

    [>] Enter Encoded Base: R1k0RE1OWldHRTNFSU5SVkc1QkRLTlpXR1VaVENOUlRHTVlETVJCV0dVMlVNTlpVR01ZREtSUlVIQTJET01aVUdSQ0RHTVpWSVlaVEVNWlFHTVpER01KWElRPT09PT09

    [-] Iteration: 1

    [-] Heuristic Found Encoding To Be: Base64

    [-] Decoding as Base64: GY4DMNZWGE3EINRVG5BDKNZWGUZTCNRTGMYDMRBWGU2UMNZUGMYDKRRUHA2DOMZUGRCDGMZVIYZTEMZQGMZDGMJXIQ======

    {{<<======================================================================>>}}

    [-] Iteration: 2

    [-] Heuristic Found Encoding To Be: Base32

    [-] Decoding as Base32: 6867616D657B57653163306D655F74305F4847344D335F323032317D

    {{<<======================================================================>>}}

    [-] Iteration: 3

    [-] Heuristic Found Encoding To Be: Base16

    [-] Decoding as Base16: hgame{We1c0me_t0_HG4M3_2021}

    {{<<======================================================================>>}}

    [-] Total Iterations: 3

    [-] Encoding Pattern: Base64 -> Base32 -> Base16

    [-] Magic Decode Finished With Result: hgame{We1c0me_t0_HG4M3_2021}

    [-] Finished in 0.0023 seconds

hgame{We1c0me_t0_HG4M3_2021}
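For reference, the same Base64 -> Base32 -> Base16 chain can be undone with nothing but Python's standard `base64` module. This is only a minimal sketch; the function name `decode_chain` is my own and not part of basecrack.

```python
import base64


def decode_chain(data: str) -> str:
    """Undo Base64, then Base32, then Base16, in that order."""
    step1 = base64.b64decode(data)   # Base64 -> Base32 text
    step2 = base64.b32decode(step1)  # Base32 -> hex text
    step3 = base64.b16decode(step2)  # hex -> plaintext bytes
    return step3.decode("ascii")


# Ciphertext copied from the challenge description above.
CIPHERTEXT = (
    "R1k0RE1OWldHRTNFSU5SVkc1QkRLTlpXR1VaVENOUlRHTVlETVJCV0dVMlVNTlpV"
    "R01ZREtSUlVIQTJET01aVUdSQ0RHTVpWSVlaVEVNWlFHTVpER01KWElRPT09PT09"
)

print(decode_chain(CIPHERTEXT))
```

Unlike basecrack, this does not guess the encodings; the order is hard-coded from the three steps listed above.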

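How to Raise an Unremarkable Archive (不起眼压缩包的养成方法)

I was stuck for a whole day because of the compression method. A very simple challenge.

Open the link: it is an image. Save it with right-click and run binwalk on it to extract an archive. The archive needs a password, and the comment next to it says the password is 8 digits, so brute-force it.

PW: 70415155, which yields plain.zip and NO PASSWORD.txt.

Here comes the hard part. It is obviously a known-plaintext attack, but ARCHPR kept throwing errors no matter how long I fiddled with it. I knew the cause was that my compression method or software differed from the challenge author's, and at the time I figured it would work once I knew which archiver the author had used. After a lot of searching I found a writeup where someone did a known-plaintext attack like this: delete NO PASSWORD.txt from the original archive, then add the NO PASSWORD.txt you already have. Result: failure. I even started to doubt the whole approach. Looking at the content of the txt, I suspected zero-width characters, but that led nowhere either. This is only week one, and apart from the sign-in challenge how hard could it be? Surely not that hard; I should not overestimate the challenge. Then, while compressing with Bandizip, I noticed the compression method setting, which includes a store (no compression) mode, and the txt mentions this too. Compressing with the store method to build the known-plaintext archive does the trick.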
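The known-plaintext archive can also be built without a GUI archiver. The sketch below uses Python's `zipfile` with `ZIP_STORED`, which writes the file uncompressed; the helper name `make_stored_zip` and the output file name are my own, and I have not checked that ARCHPR accepts the result for this particular challenge.

```python
import os
import zipfile


def make_stored_zip(src_path: str, zip_path: str) -> None:
    """Write src_path into a new zip using the 'store' method (no compression)."""
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_STORED) as zf:
        zf.write(src_path, arcname=os.path.basename(src_path))


if __name__ == "__main__":
    # Assumes NO PASSWORD.txt sits in the current directory.
    if os.path.exists("NO PASSWORD.txt"):
        make_stored_zip("NO PASSWORD.txt", "known_plaintext.zip")
```

With the store method the compressed size equals the original size, which is a quick way to confirm the archive was built the way the attack expects.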

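PW: C8uvP$DP, which yields flag.zip. Surely this one is pseudo-encryption. Undoing the pseudo-encryption gives a string of characters. You do not even need to undo it: drop the zip into WinHex and the content of flag.txt is directly visible. It looks like UTF-8 encoded text, but decoding it that way does not work. It is HTML entity encoding, so decode that.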

hgame{2IP_is_Usefu1_and_Me9umi_i5_W0r1d}
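HTML entity decoding needs no online tool; Python's standard `html.unescape` handles numeric entities. The sample string below is made up for illustration and is not the actual content of flag.txt.

```python
import html

# Made-up sample in the same style as the challenge data (numeric entities).
sample = "&#104;&#103;&#97;&#109;&#101;&#123;&#125;"

decoded = html.unescape(sample)
print(decoded)
```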

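Galaxy

Traffic analysis. Open the capture in Wireshark, filter for http, and find two GET requests. Following either HTTP stream shows that a Baidu image was requested. So use Export Objects -> HTTP, select galaxy.png, and save it. The resulting image will not open in Kali, so the width or height must have been tampered with. Fixing the dimensions is all it takes.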

hgame{Wh4t_A_W0nderfu1_Wa11paper}
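The width/height fix can be scripted instead of done in a hex editor. A PNG starts with an 8-byte signature followed by the IHDR chunk, whose first 8 data bytes are the big-endian width and height, and whose CRC covers the chunk type plus data. The sketch below rewrites both and recomputes the CRC; `set_png_size` is a name I made up, and the right height for galaxy.png still has to be found by trial (or by brute-forcing values against the original CRC).

```python
import struct
import zlib

PNG_SIG = b"\x89PNG\r\n\x1a\n"


def set_png_size(png: bytes, width: int, height: int) -> bytes:
    """Return a copy of png with IHDR width/height replaced and CRC fixed."""
    if png[:8] != PNG_SIG or png[12:16] != b"IHDR":
        raise ValueError("not a PNG with a leading IHDR chunk")
    ihdr = bytearray(png[16:29])                   # 13 bytes of IHDR data
    ihdr[0:8] = struct.pack(">II", width, height)  # big-endian width, height
    crc = zlib.crc32(b"IHDR" + bytes(ihdr)) & 0xFFFFFFFF
    # Bytes 29..33 hold the old CRC; everything after stays untouched.
    return png[:16] + bytes(ihdr) + struct.pack(">I", crc) + png[33:]
```

Usage would be along the lines of reading galaxy.png as bytes, calling `set_png_size(data, width, new_height)`, and writing the result to a new file.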

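Word Re:MASTER

An archive that extracts to two docx files: first.docx and maimai.docx. Dropping both into WinHex shows that maimai.docx is an encrypted document with a hint at its tail. So rename first.docx to .zip, find password.xml inside, open that in WinHex, and find Brainfuck code. Running it gives the document password for maimai.docx: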

DOYOUKNOWHIDDEN?
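Brainfuck is small enough that a throwaway interpreter fits in a few lines, which helps when no online decoder is at hand. This is a minimal sketch with the usual conventions (30000 cells, 8-bit wrap-around); the function name `run_brainfuck` is my own, and the demo program is a made-up one, not the content of password.xml.

```python
def run_brainfuck(code: str, stdin: str = "") -> str:
    """Interpret a Brainfuck program and return everything it prints."""
    # Pre-compute matching bracket positions.
    stack, jump = [], {}
    for pos, ch in enumerate(code):
        if ch == "[":
            stack.append(pos)
        elif ch == "]":
            start = stack.pop()
            jump[start], jump[pos] = pos, start

    tape, ptr, pc = [0] * 30000, 0, 0
    out, inp = [], iter(stdin)
    while pc < len(code):
        ch = code[pc]
        if ch == ">":
            ptr += 1
        elif ch == "<":
            ptr -= 1
        elif ch == "+":
            tape[ptr] = (tape[ptr] + 1) % 256
        elif ch == "-":
            tape[ptr] = (tape[ptr] - 1) % 256
        elif ch == ".":
            out.append(chr(tape[ptr]))
        elif ch == ",":
            tape[ptr] = ord(next(inp, "\0")) % 256
        elif ch == "[" and tape[ptr] == 0:
            pc = jump[pc]          # skip the loop body
        elif ch == "]" and tape[ptr] != 0:
            pc = jump[pc]          # jump back to the loop start
        pc += 1
    return "".join(out)


# Made-up demo: 8 * 8 + 1 = 65, which is 'A'.
print(run_brainfuck("++++++++[>++++++++<-]>+."))
```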

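Open it and a pile of whitespace is immediately visible (my Word is set to show all hidden content by default). Select all, clear the formatting, then copy. The document contains a picture related to snow (喜欢!雪!真实的魔法, "Like! Snow! Real Magic"), so this must be SNOW steganography. Put the whitespace into flag.txt and run SNOW on it.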

hgame{Cha11en9e_Whit3_P4ND0R4_P4R4D0XXX}

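With that, all Week 1 misc challenges are cleared (AK). Writeup finished at 2021-01-31 15:03:38.

Week2

Got a first blood, which made me a little happy.

The attachment is a zip that extracts to F5.7z and Matryoshka.jpg. The picture shows a matryoshka doll (literally), and the challenge itself is nested like one too. The archive is password-protected and the password must come from the image, so it has to be F5 steganography. Running binwalk also shows a line containing a copyright string, which points to F5 as well. The F5 password comes from the Details tab of the right-click Properties dialog.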

!LyJJ9bi&M7E72*JyD

So:

    java Extract Matryoshka.jpg -p '!LyJJ9bi&M7E72*JyD'

The content of output.txt is:

e@317S*p1A4bIYIs1M

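The 7z extracts to Steghide.7z and 01.jpg. The image is one quarter of a QR code, so clearly the pieces have to be stitched together at the end. This layer must be Steghide. The passwords all come from the right-click properties.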

A7SL9nHRJXLh@$EbE8

    steghide extract -sf 01.jpg
    Enter passphrase:
    wrote extracted data to "pwd.txt".

The content of pwd.txt is:

u0!FO4JUhl5!L55%$&

The 7z extracts to Outguess.7z and 02.jpg, so outguess it is.

    outguess -r 02.jpg -t out.txt -k z0GFieYAee%gdf0%lF

The content of out.txt is:

@UjXL93044V5zl2ZKI

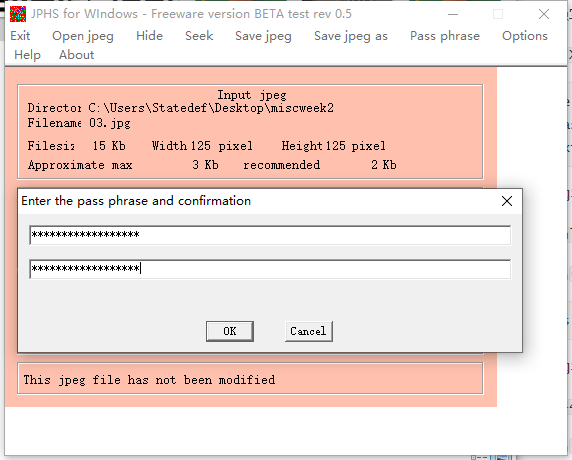

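The 7z extracts to JPHS.7z and 03.jpg, so JPHS it is.

Both passphrase fields must be filled with rFQmRoT5lze@4X4^@0

Save the result as 01.txt; its content is xSRejK1^Z1Cp9M!z@H

Extracting gives 04.jpg.

Stitch the four QR code pieces together in Paint and scan the result to get the flag.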

hgame{Taowa_is_N0T_g00d_but_T001s_is_Useful}

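Telegraph

I made a mountain out of a molehill. I threw the file straight into Audacity, looked at the spectrogram, and saw a few big characters: 850Hz. My first thought was the FM challenge from last year's roarCTF, and I even set up gqrx-sdr to analyze it, which gave nothing. I wasted a great deal of time and had clearly walked into a dead end.

The file name turned out to be Chinese telegraph code, which decodes to: band-pass filter. Searching around for that gave nothing. This hint is actually somewhat misleading.

The blunt solution in the end: just play the audio in Audacity. After a stretch of music, Morse code is audible. Fiddling with the spectrogram confirms that there is Morse code, and decoding it gives the flag. Only afterwards did I realize that 850Hz tells you roughly where the Morse code sits.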

YOURFLAGIS4G00DS0NGBUTN0T4G00DMAN039310KI

flag:hgame{4G00DS0NGBUTN0T4G00DMAN039310KI}
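Once the dots and dashes have been transcribed by ear or from the spectrogram, turning them into text is a table lookup. The sketch below assumes letters are separated by single spaces and words by " / "; that input convention and the name `decode_morse` are my own, and the demo string is made up rather than transcribed from the audio.

```python
MORSE = {
    ".-": "A", "-...": "B", "-.-.": "C", "-..": "D", ".": "E", "..-.": "F",
    "--.": "G", "....": "H", "..": "I", ".---": "J", "-.-": "K", ".-..": "L",
    "--": "M", "-.": "N", "---": "O", ".--.": "P", "--.-": "Q", ".-.": "R",
    "...": "S", "-": "T", "..-": "U", "...-": "V", ".--": "W", "-..-": "X",
    "-.--": "Y", "--..": "Z",
    "-----": "0", ".----": "1", "..---": "2", "...--": "3", "....-": "4",
    ".....": "5", "-....": "6", "--...": "7", "---..": "8", "----.": "9",
}


def decode_morse(message: str) -> str:
    """Decode space-separated Morse symbols; ' / ' separates words."""
    words = message.strip().split(" / ")
    # Unknown symbols are kept visible as '?' instead of being dropped.
    return " ".join(
        "".join(MORSE.get(symbol, "?") for symbol in word.split())
        for word in words
    )


print(decode_morse("-.-- --- ..- .-. / ..-. .-.. .- --."))
```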

Hallucigenia

An image. In WinHex the tail of the file looks normal, and the LSB planes in Stegsolve look normal too. Switching filters reveals a QR code, which scans to:

    gmBCrkRORUkAAAAA+jrgsWajaq0BeC3IQhCEIQhCKZw1MxTzSlNKnmJpivW9IHVPrTjvkkuI3sP7bWAEdIHWCbDsGsRkZ9IUJC9AhfZFbpqrmZBtI+ZvptWC/KCPrL0gFeRPOcI2WyqjndfUWlNj+dgWpe1qSTEcdurXzMRAc5EihsEflmIN8RzuguWq61JWRQpSI51/KHHT/6/ztPZJ33SSKbieTa1C5koONbLcf9aYmsVh7RW6p3SpASnUSb3JuSvpUBKxscbyBjiOpOTq8jcdRsx5/IndXw3VgJV6iO1+6jl4gjVpWouViO6ih9ZmybSPkhaqyNUxVXpV5cYU+Xx5sQTfKystDLipmqaMhxIcgvplLqF/LWZzIS5PvwbqOvrSlNHVEYchCEIQISICSZJijwu50rRQHDyUpaF0y///p6FEDCCDFsuW7YFoVEFEST0BAACLgLOrAAAAAggUAAAAtAAAAFJESEkNAAAAChoKDUdOUIk=

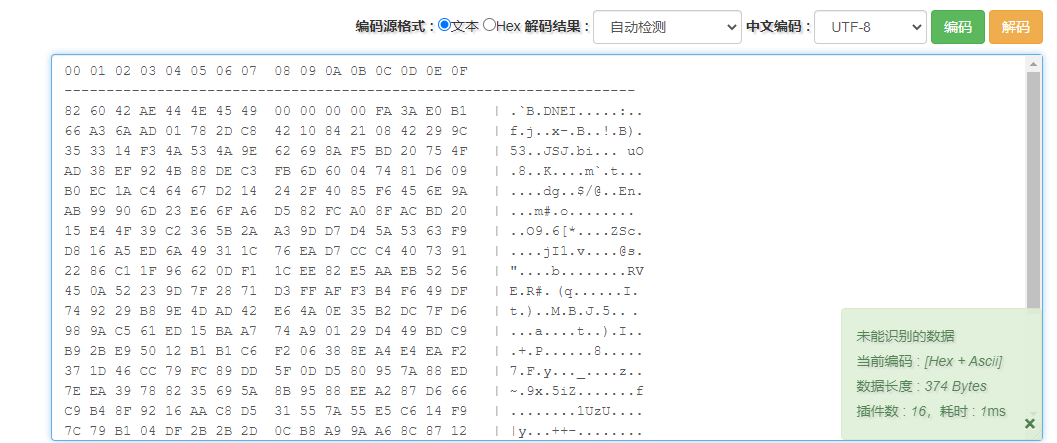

Clearly Base64. Decoding it gives:

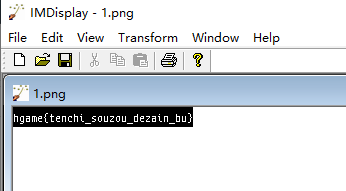

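Note the 82 60: it is the tail of a PNG in reverse order. The reversal is done byte by byte, so my existing reversal script did not work. I spent a good while trying to write a new one, but being the noob I am, I could not. So I made a snap decision to do it by hand: copy the bytes out in reverse order, paste them into 010 Editor, and save as 1.png. The result is a mirrored flag, so ImageMagick to the rescue.

Transform -> flop gives: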

hgame{tenchi_souzou_dezain_bu}
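The byte-wise reversal that I ended up doing by hand is a one-liner in Python: decode the Base64 and reverse the resulting bytes with a slice. The sketch below demonstrates it on the last 12 characters of the QR payload only, which reverse into the 8-byte PNG signature; `reversed_payload` is a name I made up.

```python
import base64


def reversed_payload(b64_text: str) -> bytes:
    """Base64-decode the text and reverse the result byte by byte."""
    return base64.b64decode(b64_text)[::-1]


# Last 12 characters of the QR code payload above.
tail = "ChoKDUdOUIk="
print(reversed_payload(tail))
```

Applying the same function to the full payload and writing the bytes to 1.png should give the mirrored image, which can then be flopped as described.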

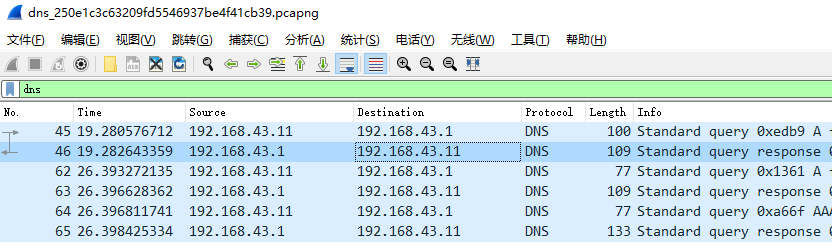

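DNS

This challenge taught me a new type of traffic analysis. Very nice.

It was my first DNS traffic analysis. I opened the capture in Wireshark and got nowhere after a round of poking. I looked up DNS traffic analysis, learned a bit about DNS, and then:

Filter for the dns protocol, which gives the following 6 packets.

Look for the response packets and expand Answers under Domain Name System (response). In principle there would be a TXT record containing the flag, but of course nothing that obvious was there. In the only UDP stream I found the server's IP and port and tried curl and nc against it, but the connection was refused. That is probably not the intended route.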

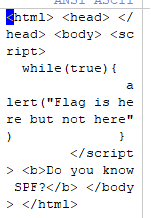

Running binwalk gives an html file. Pulling it out and opening it in WinHex reveals the hint:

Do you know SPF?

Searching for the keywords DNS SPF turned up this article: https://blog.csdn.net/weixin_34232617/article/details/91699242

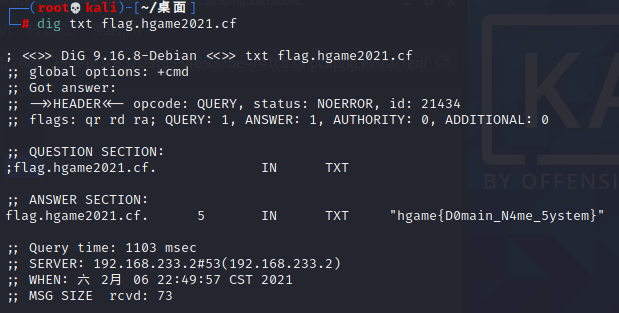

We need to query the SPF record, so use the dig command. The domain is flag.hgame2021.cf, taken from the capture.

    dig txt flag.hgame2021.cf

hgame{D0main_N4me_5ystem}

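20:00:00 to 21:33:12: a bit over an hour and a half to clear (AK) misc. I suppose that counts as some progress on my misc learning journey.

Week 3

Week 3 was brutally hard. I scored zero.

A R K

First time seeing this kind of challenge, which shows how little I have seen. I poked at the capture for a while and got nothing. Unlike last week there was no good reference material to look up, so I simply gave up.

It is FTP traffic analysis plus TLS traffic analysis. Start from the FTP traffic: select FTP-DATA, follow the TCP stream, and save it to get ssl.log.

After importing it into Wireshark, the TLS traffic can be decrypted.

Export the HTTP objects. The hint says: Arknights is a tower defense game in which deployable units can be placed on the field, and it has an auto-deploy feature that records the deployment operations.

getbattleReplay comes before battleStart, and from its name it is not hard to tell that this is the auto-deploy data. The second one is the data returned by the server to the client; export it as json according to its Content-type.

It contains a Base64 string that decodes to a zip file. Extraction reports that the file is corrupted. The header is 504B0506; changing it to 504B0304 lets default_entry be extracted, and its content is all JSON.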
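The header patch can be done in code right after the Base64 decode. `50 4B 05 06` is the zip end-of-central-directory signature and `50 4B 03 04` is the local file header signature that a zip normally starts with. A minimal sketch (the function name `fix_zip_magic` is my own):

```python
def fix_zip_magic(data: bytes) -> bytes:
    """Replace a leading PK\\x05\\x06 with the local file header magic PK\\x03\\x04."""
    bad, good = b"PK\x05\x06", b"PK\x03\x04"
    if data[:4] == bad:
        return good + data[4:]
    return data  # already fine, or broken in some other way
```

The returned bytes can be wrapped in `io.BytesIO` and opened with `zipfile.ZipFile` to pull out default_entry without touching a hex editor.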

Copy the script:

    import json5
    import numpy as np
    from PIL import Image

    def json2img(src: str, o: str):
        # Each log entry marks one deployed unit; paint its (row, col) black.
        flagJson = json5.loads(open(src, 'r').read())
        resImg = Image.new('RGB', (100, 100), (255, 255, 255))
        resArr = np.array(resImg)
        for dusk in flagJson['journal']['logs']:
            resArr[dusk['pos']['row']][dusk['pos']['col']] = (0, 0, 0)
        resImg = Image.fromarray(resArr).convert('RGB')
        resImg.save(o)

    json2img('default_entry.json', 'res.png')

Scan the QR code:

hgame{Did_y0u_ge7_Dusk?}

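A R C

Download the attachment. I copied out the content of 8558.png and decoded it as Base85 and then Base58, which gave nothing. For the archive BVenc(10001540).7z, I encoded 10001540 into a BV number and tried it as the extraction password: wrong. There is also a font file, tables.ttf, whose purpose I could not figure out, so I gave up. The hints were updated later, but I could not be bothered to read them; the challenge had already crushed me.

Only after reading the writeup did I learn that the string obtained after the Base85 step is a table, and that you need to understand how BV numbers work to write the matching encoder.

Copying again.

    table='h8btxsWpHnJEj1aL5G3gBuMTKNPAwcF4fZodR9XQ7DSUVm2yCkr6zqiveY'
    tr={}
    for i in range(58):
        tr[table[i]]=i
    s=[11,10,3,8,4,6]
    xor=177451812
    add=8728348608

    def dec(x):
        r=0
        for i in range(6):
            r+=tr[x[s[i]]]*58**i
        return (r-add)^xor

    def enc(x):
        x=(x^xor)+add
        # 12-character template; the blanks at indices 3,4,6,8,10,11 get filled in
        r=list('BV1  4 1 7  ')
        for i in range(6):
            r[s[i]]=table[x//58**i%58]
        return ''.join(r)

    print(enc(10001540))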

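Extract and open arc.mkv. Looking up the question asked in the video gives the answer 42. At the end of the video there are two strings, #)+F7IIMEH:?Injiikffi and pwbvmpoakiscqdobil. fragment.txt is full of seemingly meaningless text containing spaces. The hint says: the character range of some ROT is used, but with a different shift. Frequency analysis is a good thing. Do not forget the question in the video.

The character range is that of ROT47, but the shift is changed to 42 (the challenge actually used 52 when encoding, so shifting back takes 42).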
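A shift over the ROT47 range is easy to sketch: ROT47 works on the 94 printable ASCII characters from `!` (33) to `~` (126), and everything else, including spaces, is left alone. The function name `rot_printable` is my own. Since 42 + 52 = 94, shifting by 42 undoes a shift by 52.

```python
def rot_printable(text: str, shift: int = 42) -> str:
    """Rotate characters in the ROT47 range (ASCII 33..126) by `shift`."""
    out = []
    for ch in text:
        code = ord(ch)
        if 33 <= code <= 126:
            out.append(chr(33 + (code - 33 + shift) % 94))
        else:
            out.append(ch)  # spaces and anything outside the range stay as-is
    return "".join(out)


# First string from the end of the video.
print(rot_printable("#)+F7IIMEH:?Injiikffi"))
```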

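After decryption only the first two lines carry useful information. The first line reads:

Flag is not here, but I write it because you may need more words to analysis what encoding the line1 is.

So the first line in the video is encoded the same way as fragment.txt:

#)+F7IIMEH:?Injiikffi

MSUpasswordis:6557225

Searching for the provided software together with MSU leads to: https://www.compression.ru/video/stego_video/index_en.html

Install the provided software, copy the plugin into the plugins32 folder, start VirtualDub.exe, and load the video:

Video -> Filters -> Add -> MSU StegoVideo 1.0 brings up the MSU StegoVideo plugin dialog. Choose Extract file from video, and fill in the password and the path for the extracted file:

OK -> OK to return to the main window. Drag the slider to the very start of the video, then File -> Save Video with any output path, and the hidden txt file is produced:

    arc.hgame2021.cf
    Hikari
    Tairitsu

Open the website and enter the username and password:

Now on to the second line:

    For line2, Liki has told you what it is, and Akira is necessary to do it.

Some of this can be cross-referenced with the first challenge of Crypto WEEK-1.

The only thing in Crypto WEEK-1 that involves Liki is the Vigenère cipher, so this is Vigenère with the key Akira: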

pwbvmpoakiscqdobil

pmtempestissimobyd
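The Vigenère step can be checked in a few lines: each ciphertext letter is shifted back by the corresponding key letter (a=0, b=1, ...), with the key repeating. This is a minimal sketch for ASCII letters only, and the name `vigenere_decrypt` is my own.

```python
def vigenere_decrypt(ciphertext: str, key: str) -> str:
    """Decrypt a Vigenère ciphertext; non-letters pass through unchanged."""
    shifts = [ord(k) - ord("a") for k in key.lower()]
    out, i = [], 0
    for ch in ciphertext:
        if ch.isascii() and ch.isalpha():
            base = ord("A") if ch.isupper() else ord("a")
            shift = shifts[i % len(shifts)]
            out.append(chr(base + (ord(ch) - base - shift) % 26))
            i += 1  # the key only advances on letters
        else:
            out.append(ch)
    return "".join(out)


print(vigenere_decrypt("pwbvmpoakiscqdobil", "akira"))
```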

The / does not mean "may be entered"; it is the website path. So visiting https://arc.hgame2021.cf/pmtempestissimobyd gives the flag:

hgame{Y0u_Find_Pur3_Mem0ry}

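accuracy

A newly added challenge that I did not attempt at all. Good thing I did not: the writeup shows it is machine learning, and there is no way I am learning that right now.

Pasting the official writeup directly.

There are two attachments. One is a zip containing over ten thousand images, each a black-and-white image of size 28×28. Anyone who has worked with MNIST (released as an on-campus hint) may notice that the digits look very much like it, and the letters are very similar too. The other attachment is a csv file. The number of rows is not important: each row is one record with 785 columns in total, or 784 columns not counting the first label column. $28 \times 28 = 784$, and a look at a few random columns shows values no greater than 255 and no less than 0, so these are very likely records of 28×28 image data. The approach is very simple: recognize the character in every image in the archive, concatenate the results in order into a string, and paste it into the URL given by the challenge. To lower the difficulty, the images in the archive were all extracted from the .csv file, except that, to prevent brute-force matching, every non-zero value had 1 subtracted. The official solution trains a neural network for recognition. Since submission requires an accuracy above 95%, an ordinary model is enough. The data analysis and training script follows: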

    import numpy as np
    import pandas as pd
    import matplotlib.pyplot as plt
    import tensorflow as tf
    import seaborn as sns
    import os
    from sklearn.preprocessing import MinMaxScaler
    from sklearn.model_selection import train_test_split
    from sklearn.utils import shuffle
    from tensorflow.keras.layers import Conv2D, MaxPooling2D, Dropout, Flatten, Dense

    sns.set()
    gpus = tf.config.experimental.list_physical_devices(device_type='GPU')
    for gpu in gpus:
        tf.config.experimental.set_memory_growth(gpu, True)
    os.environ['CUDA_VISIBLE_DEVICES'] = '0'

    # Load the csv: first column is the label, the other 784 are pixels.
    dataset_path = "full_Hex.csv"
    dataset = pd.read_csv(dataset_path).astype('float32')
    X = dataset.drop('label', axis=1)
    y = dataset['label']
    print("shape:", X.shape)
    print("column count:", len(X.iloc[1]))
    print("784 = 28X28")

    # Preview 16 random samples.
    X_shuffle = shuffle(X)
    plt.figure(figsize=(12, 10))
    row, columns = 4, 4
    for i in range(16):
        plt.subplot(columns, row, i+1)
        plt.imshow(X_shuffle.iloc[i].values.reshape(28, 28), interpolation='nearest', cmap='Greys')
    plt.show()

    # Label distribution.
    alphabets_mapper = {0:'0',1:'1',2:'2',3:'3',4:'4',5:'5',6:'6',7:'7',8:'8',9:'9',10:'A',11:'B',12:'C',13:'D',14:'E',15:'F'}
    dataset_alphabets = dataset.copy()
    dataset['label'] = dataset['label'].map(alphabets_mapper)
    label_size = dataset.groupby('label').size()
    label_size.plot.barh(figsize=(10, 10))
    plt.show()

    # Split and scale to [0, 1].
    X_train, X_test, y_train, y_test = train_test_split(X, y)
    standard_scaler = MinMaxScaler()
    standard_scaler.fit(X_train)
    X_train = standard_scaler.transform(X_train)
    X_test = standard_scaler.transform(X_test)

    print("Data after scaler")
    X_shuffle = shuffle(X_train)
    plt.figure(figsize=(12, 10))
    row, columns = 4, 4
    for i in range(16):
        plt.subplot(columns, row, i+1)
        plt.imshow(X_shuffle[i].reshape(28, 28), interpolation='nearest', cmap='Greys')
    plt.show()

    X_train = X_train.reshape(X_train.shape[0], 28, 28, 1).astype('float32')
    X_test = X_test.reshape(X_test.shape[0], 28, 28, 1).astype('float32')
    y_train = tf.keras.utils.to_categorical(y_train)
    y_test = tf.keras.utils.to_categorical(y_test)

    # Small CNN classifier.
    cls = tf.keras.models.Sequential()
    cls.add(Conv2D(32, (5, 5), input_shape=(28, 28, 1), activation='relu'))
    cls.add(MaxPooling2D(pool_size=(2, 2)))
    cls.add(Dropout(0.3))
    cls.add(Flatten())
    cls.add(Dense(128, activation='relu'))
    cls.add(Dense(64, activation='relu'))
    cls.add(Dense(len(y.unique()), activation='softmax'))
    cls.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    history = cls.fit(X_train, y_train, validation_data=(X_test, y_test), epochs=5, batch_size=200, verbose=2)
    scores = cls.evaluate(X_test, y_test, verbose=0)
    print("CNN Score:", scores[1])

    plt.plot(history.history['loss'])
    plt.plot(history.history['val_loss'])
    plt.title('Model loss')
    plt.ylabel('Loss')
    plt.xlabel('Epoch')
    plt.legend(['Train', 'Test'], loc='upper left')
    plt.show()
    cls.save('my_Hex_full_model_2.h5')

Then use the trained model to recognize the files in the archive:

    import pandas as pd
    import matplotlib.pyplot as plt
    import numpy as np
    import tensorflow as tf
    import tensorflow.keras as keras
    import os

    alphabets_mapper = {0:'0',1:'1',2:'2',3:'3',4:'4',5:'5',6:'6',7:'7',8:'8',9:'9',10:'a',11:'b',12:'c',13:'d',14:'e',15:'f'}
    gpus = tf.config.experimental.list_physical_devices(device_type='GPU')
    for gpu in gpus:
        tf.config.experimental.set_memory_growth(gpu, True)
    os.environ['CUDA_VISIBLE_DEVICES'] = '0'
    model = tf.keras.models.load_model('./my_Hex_full_model_2.h5')
    imgs = []

    def pre(path: str):
        # Load one image as grayscale and invert it to match the csv data.
        image_path = path
        image = tf.keras.preprocessing.image.load_img(image_path, color_mode="grayscale")
        input_arr = keras.preprocessing.image.img_to_array(image)
        image_arr = 255 - input_arr
        imgs.append(image_arr)

    total = 12272
    ans = list()
    for i in range(total):
        pre(f"./png/{i}.png")
    predictions = model.predict(np.array(imgs))
    t = predictions.argmax(axis=1)
    squarer = lambda t: alphabets_mapper[t]
    vfunc = np.vectorize(squarer)
    ans = vfunc(t)
    with open("result.txt", "w", encoding="utf-8") as e:
        print(''.join(ans.tolist()), file=e)

The PyTorch version is similar and is not shown here. This model's accuracy is around 98%, without fine-tuning.

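Week4

Akira's Eye-1 (Akira之瞳-1)

The title makes it obvious that this is forensics. The archive extracts to a file named important_work.raw.

Feed it straight into volatility:

    ./volatility -f important_work.raw imageinfo

It turns out to be a Win7 image.

So list the processes with pslist:

    ./volatility -f important_work.raw --profile=Win7SP1x64 pslist

There is an important_work process with pid 1092.

Dump it:

    ./volatility -f important_work.raw --profile=Win7SP1x64 memdump -p 1092 -D ./

This gives 1092.dmp; run foremost on it directly.

The output folder contains a zip with the comment Password is sha256(login_password)

So we need to find the login password and hash it with SHA-256. Run hashdump directly: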

    ./volatility -f important_work.raw --profile=Win7SP1x64 hashdump
    Volatility Foundation Volatility Framework 2.6
    Administrator:500:aad3b435b51404eeaad3b435b51404ee:31d6cfe0d16ae931b73c59d7e0c089c0:::
    Guest:501:aad3b435b51404eeaad3b435b51404ee:31d6cfe0d16ae931b73c59d7e0c089c0:::
    Genga03:1001:aad3b435b51404eeaad3b435b51404ee:84b0d9c9f830238933e7131d60ac6436:::

First I fed the Administrator line into ophcrack, which showed that the account has no password. Then I fed in the Genga03 line, and cracking failed. I took some detours at this point, such as using hivelist to look up virtual addresses and dump the password that way, which was unnecessary. In the end I went to ophcrack's online cracking site https://www.objectif-securite.ch/ophcrack and used Genga03's NT hash, 84b0d9c9f830238933e7131d60ac6436, which cracked to the password asdqwe123. Hashing that with SHA-256 gives 20504cdfddaad0b590ca53c4861edd4f5f5cf9c348c38295bd2dbf0e91bca4c3
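The SHA-256 step is a single call to Python's `hashlib`. The sketch below assumes the password is hashed as plain UTF-8 bytes with no trailing newline (an `echo` without `-n` would add one and change the digest); the helper name `zip_password` is my own. The printed value can be compared against the digest quoted above.

```python
import hashlib


def zip_password(login_password: str) -> str:
    """Return the hex SHA-256 digest of the login password."""
    return hashlib.sha256(login_password.encode("utf-8")).hexdigest()


print(zip_password("asdqwe123"))
```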

Extraction yields two images, Blind.png and src.png. Clearly a blind watermark, so bring out the script:

    python3 bwmforpy3.py decode src.png Blind.png flag.png

hgame{7he_f1ame_brin9s_me_end1ess_9rief}

Akira's Eye-2 (Akira之瞳-2)

secret_work.raw and secret.7z. Volatility again:

    ./volatility -f secret_work.raw imageinfo

Still a Win7SP1x64 system.

    ./volatility -f secret_work.raw --profile=Win7SP1x64 pslist

There is a notepad.exe and many chrome.exe processes. iehistory shows the browser history, in which a dumpme.txt was opened.

filescan, then dumpfiles:

    ./volatility -f secret_work.raw --profile=Win7SP1x64 filescan | grep dumpme.txt
    Volatility Foundation Volatility Framework 2.6
    0x000000007ef94820 2 0 RW-r-- \Device\HarddiskVolume1\Users\Genga03\Desktop\dumpme.txt
    0x000000007f2b5f20 2 0 RW-rw- \Device\HarddiskVolume1\Users\Genga03\AppData\Roaming\Microsoft\Windows\Recent\dumpme.txt.lnk

The one ending in lnk is a shortcut and can be ignored. The address is 0x000000007ef94820.

    ./volatility -f secret_work.raw --profile=Win7SP1x64 dumpfiles -Q 0x000000007ef94820 -D ./

This gives file.None.0xfffffa801aa35340.dat

Dropping it into WinHex reveals:

zip password is: 5trqES&P43#y&1TO

And you may need LastPass

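Extraction gives an empty folder S-1-5-21-262715442-3761430816-2198621988-1001, a container, and a Cookies file. I did not know what they were for. The hint says LastPass is needed; I installed it but did not know how to use it, and searching for how to read a password from cookies gave no results. In WinHex, Cookies turns out to be an SQLite file, but an SQLite viewer showed nothing useful. Scrolling further down there is the word VeraCrypt, so container should be a VeraCrypt-encrypted file, with the key obtained through LastPass and the Cookies. I got stuck here and waited for the writeup.

Only after reading the official writeup did I learn that it uses volatility's lastpass plugin. In hindsight I should have searched with a keyword combination like "lastpass 内存取证" (lastpass memory forensics), which immediately turns up relevant articles and should have led to a smooth solve. Such a pity. Still, I learned how to use mimikatz, which I had never touched before.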

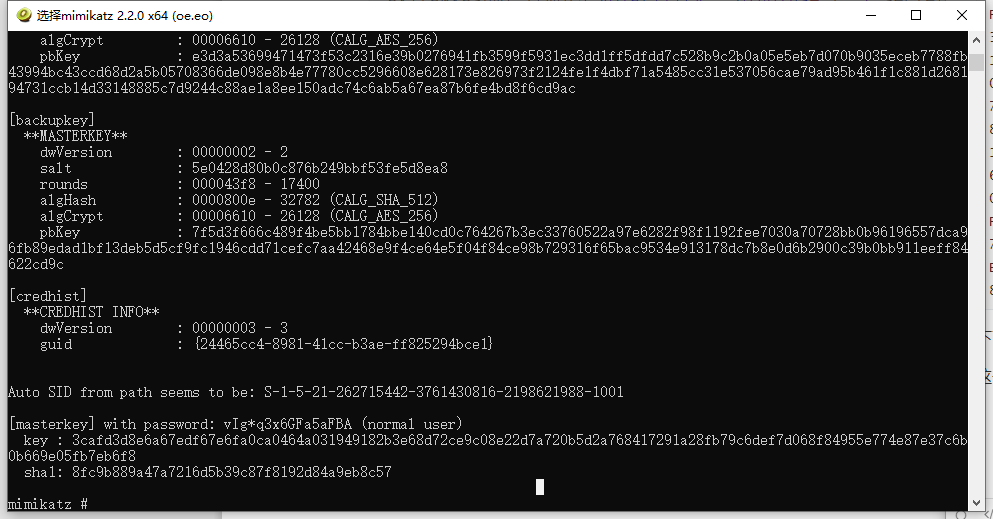

To decrypt the Cookies with mimikatz, the login password is needed; get it with volatility's lastpass plugin. (Installing this plugin and its libraries took me two hours on and off, and to be able to use plugins I switched from the clean standalone volatility executable to running it as a py file.)

    vol.py -f secret_work.raw --profile=Win7SP1x64 lastpass
    Searching for LastPass Signatures
    Found pattern in Process: chrome.exe (3948)
    Found pattern in Process: chrome.exe (3948)
    Found pattern in Process: chrome.exe (3948)
    Found pattern in Process: chrome.exe (3948)
    Found pattern in Process: chrome.exe (2916)
    Found pattern in Process: chrome.exe (2916)
    Found pattern in Process: chrome.exe (2916)
    Found pattern in Process: chrome.exe (2916)
    Found pattern in Process: chrome.exe (2916)
    Found pattern in Process: chrome.exe (1160)
    Found pattern in Process: chrome.exe (1160)
    Found pattern in Process: chrome.exe (1160)
    Found pattern in Process: chrome.exe (1160)

    Found LastPass Entry for live.com
    UserName: windows login & miscrosoft
    Pasword: Unknown

    Found LastPass Entry for live.com,bing.com,hotmail.com,live.com,microsoft.com,msn.com,windows.com,windowsazure.com,office.com,skype.com,azure.com
    UserName: windows login & miscrosoft
    Pasword: vIg*q3x6GFa5aFBA

    Found Private Key
LastPassPrivateKey<308204BB020100300D06092A864886F70D0101010500048204A5308204A10201000282010100BF794F57D296731F67FD1007BEB13A7732DE75CEB688A0A0B8A4C9DE5D0757E83F9CE8EED14346977C72C65F2C2834F150D9FB54086531896CDEFD6D8F4A5CCA2D39E0ADCB24AA6EE075579E9C6631588E9474F6B91B9D1D4D23E55442FA4E89D6810A764CCCEB224DB045DE8E9B17D3A0E561F96D4F414E775A76EA74031AB0EDAB640D1D5FFB8B83F7F7F0CA2D415F9E68CB9DB1AB6028012724AE5674FCC5C0C6085FD2A5C39E785E36C899166120893095779104A123090681914834E063FD433E0F54A221BFA6B344F76B270D1FB5FBC5A7385911A0222A65FD7FDA3573F1A9C8C8B75003664DC998FB6BAB048D65F0A44A23E1446E299A4323280A13ED020111028201000B435F052A815210E7FFD3C43864C734302B341B37E9EB54BF91390D1487F61CB872A44A488B7C9F7FCA8423B74DA8C2E6A369230F8D7B626FD0E1BB268BE7572FD63A64937AA09D1C43234590BAB79BCC26D9B429019FD48C112B9B8B7822BCD061F18E7CFCFEC5C855A9C1CC273DA30976E7A542AA4F22BBBA06FEBB87B6468A44BD7E57DA570AB63E1A013AD75AC3B6B3927D274769E4774B7DC66DC10CA337465A39221C062B9B96BF4E8BF484C3F171A40E41B6D32FC417E0A54EFEE8896346947F7CB40B382F2D8AB78D6CD040570FAC76C0497CC3A677B884B6208157E482D42B0CD675C7F52F50AAA221C076F2604475B4A3F766B9B0103DA11633ED02818100FE8270E2DD0E11837ECDE3E61EED958F59F0FC906A46082A9C38ED503968174F233CC4A7E95F1DF125CEDAAF56A374B986883CFD803FCE883378DCBB43EBDBB631E6069D3151572368206134BB850E3B47638C8E5CB4F4A742D30D87876BB76ACEEA9A0EEB6BB5301A5E730C976F660693BA37E9A73F66140F3EE3E6058687B702818100C0985DC66AD22251EB0A59F5C2F2A4D1228B14BDABA74FD178EADD30D33B0E9FF1DD45ECA56A3CC7FD8CA7E1F7361B63FA1C7387B3A0CC6ECFF7B9DBC55B938E33AD5AFADB5C0BE11C8CAD924B682A9EA68DC53616C2D3FAD16417A5E045E732F60F17DDF1A67BEEEB46CA9A0FFDD6A0B9D1E08F7DBE7087C5AA4B25700A197B0281801DF13A750AF298A60EEB0BC0B8582FB6830D4AE3D044796E6CBB67369D578A458BACCBD784DE0385C8367414A0C7EF9D5B1F163BF0F872A69CA4CEAC9E9437F7512A1EE55118A0D6FD30FC608E881FCABD1AC53DECC9FEAA4418D46A4C2ACA48CD0C8A9857EE8DC96C8395108A49574C116133C122BC2A207A43A2574BF1B59D0281805AA20E03051797AE14411B4679DB98DAE31445FEE75DCB3566142BDABDC1704B44A45D2
4119B67E5A47E6D1F0AEC491FFD3A90B85487E7BBAD2948676BEEDC06AEE82AD0673A5FF176D8CA26BA12E6E13F51C637923D90EE80A792A8698A4EAE91E8FC2C357B859D9BE5140C43C2BF5AB1CC2D70B3A4E9A94DF5C9028F13CFC102818100AAFE94334DE0035FE8673623497290B5D059E6176FB785D83A2EA157C2E3B335E2E264DC5D7EBB73E0348E7578D956F1AF59E81D9FC24FFB23A61B262184A0B06B4A0F79A750E0EFE776646CFF6ACDB2A2A4CFFBDEC64DA06F05A76A8028CC3E0D487A21C4EADA734DADEDC8280528892E07FBC98DC47B0E2ED1E69EDA479D05>LastPassPrivateKey

The long private key at the end of the output can be ignored. What matters is Pasword: vIg*q3x6GFa5aFBA

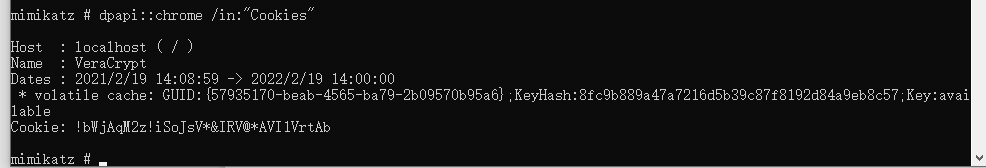

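This is the password used to decrypt the Cookies with mimikatz. Take the mimikatz that ships with Kali and run it under Windows.

Then use the masterkey to decrypt the Cookies.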

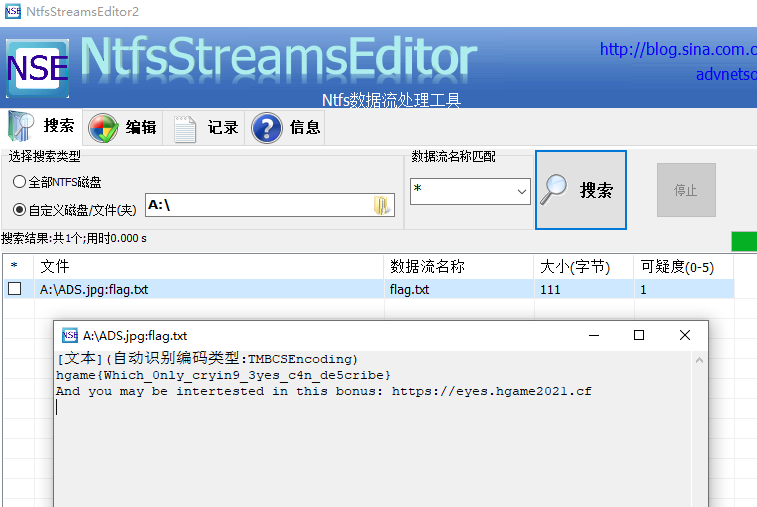

This yields the VeraCrypt password. Unlock the container to get ADS.jpg, then scan it with NtfsStreamsEditor to find flag.txt

hgame{Which_0nly_cryin9_3yes_c4n_de5cribe}